Solution for you by

URBAN-IT

NVIDIA Cloud GPUs for AI - Powered by URBAN PORTFOLIO

Powered by 100% renewable energy

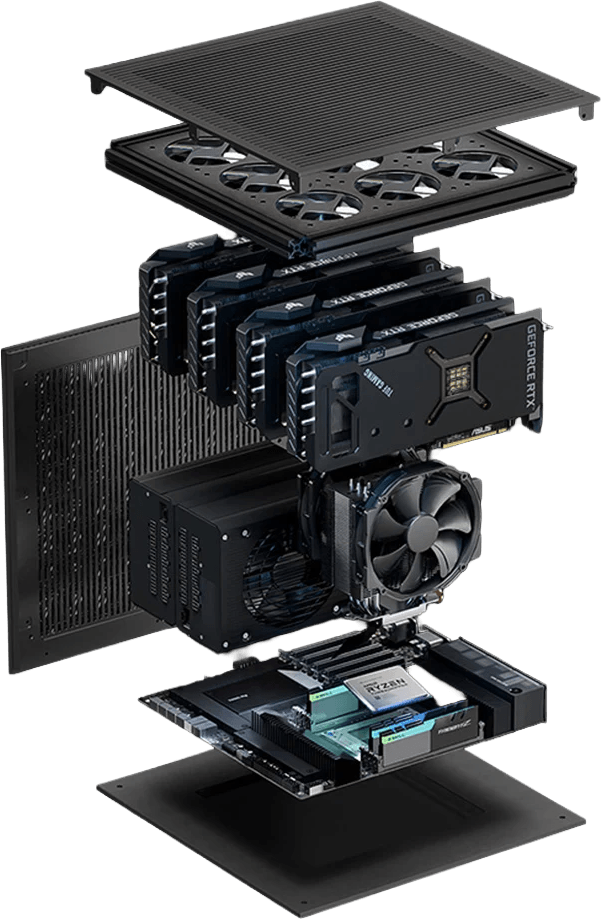

Unlock AI Potential with Cloud GPU Infrastructure

Nothing beats NVIDIA GPU performance on URBAN for artificial intelligence applications.

· Master Complex Neural Networks at Scale

Accelerate the training and deployment of sophisticated deep learning models—from transformers to generative adversarial networks (GANs)—with scalable GPU-driven infrastructure. Achieve higher model accuracy, faster convergence, and the computational capacity needed for cutting-edge AI research and production.

· Elevate AI Development Efficiency

Speed up experimentation cycles and reduce time-to-market for AI solutions. Our cloud GPU platform enables parallel training, hyperparameter optimization, and iterative refinement at unprecedented speeds, empowering your team to innovate faster and deploy smarter.

· Power Real-Time AI Inference

Deploy low-latency, high-throughput inference pipelines capable of serving real-time AI applications—whether for autonomous decision systems, interactive AI assistants, or live data analytics. Ensure seamless scalability to handle unpredictable demand spikes without compromising performance.

· Process Data-Intensive Workloads with Ease

Handle large-scale datasets essential for modern AI tasks, including natural language processing, computer vision, genomic sequencing, and multimodal AI. Our infrastructure accelerates data preprocessing, model training, and batch inference, turning raw data into actionable intelligence faster.

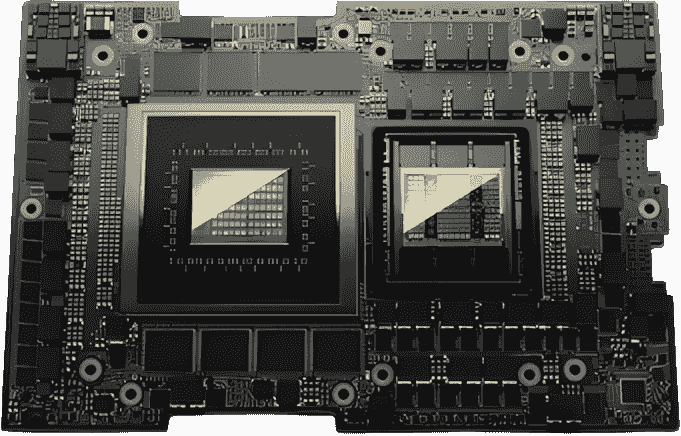

· Leverage AI-Optimized Hardware

Built on industry-leading NVIDIA GPU architectures, our cloud platform delivers dedicated AI acceleration for both training and inference. Benefit from Tensor Cores, optimized framework support, and energy-efficient performance designed specifically for demanding AI workloads.

· Scale Securely with Enterprise-Grade Infrastructure

Grow your AI initiatives without constraints. Our platform provides elastic resource scaling, integrated data security, and compliance-ready environments—so you can focus on building AI, not managing infrastructure.

Power Machine Learning with URBAN PORTFOLIO and NVIDIA GPUs

Optimize Machine Learning with Cloud GPU Power

Unlock Unprecedented Efficiency & Scale

· Accelerate Training & Inference at Scale

Hyperstack’s high-performance GPUs deliver massive parallel processing capability, dramatically reducing time-to-train for even the most complex models. Achieve faster iteration cycles, quicker deployment, and scalable inference that grows with your needs—all on a unified platform.

· Drive Breakthroughs in Deep Learning

Our GPUs are specifically optimized for deep neural network workloads, providing the computational intensity required for cutting-edge research and production-grade models. Empower your teams to solve previously intractable problems with higher accuracy and efficiency.

· Simplify Deployment with Containerized Workflows

Accelerate ML operations with fully containerized environments and integrated orchestration. Utilize pre-configured containers, frameworks, and pre-trained models to streamline experimentation, ensure reproducibility, and deploy seamlessly across environments—from development to production.

· Accelerate Projects with Transfer Learning

Leverage state-of-the-art pre-trained models and fine-tune them for your specific use cases. Significantly reduce development time, lower computational costs, and achieve competitive performance even with limited labeled data—maximizing both efficiency and ROI.
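As a rough illustration of the fine-tuning idea above, the sketch below keeps a "pre-trained" feature extractor frozen and trains only a small classification head on a handful of labeled samples. Everything in it is an assumption made for illustration: `frozen_features` is a toy stand-in for a real pre-trained backbone, and the sample data, learning rate, and epoch count are hypothetical. It uses only the Python standard library and is not tied to any framework or to the platform itself.

```python
import math

def frozen_features(x):
    """Toy stand-in for a pre-trained backbone; it is never updated."""
    return [x * x, 1.0]

def predict(weights, x):
    """Probability of the positive class from the trainable head."""
    z = sum(w * f for w, f in zip(weights, frozen_features(x)))
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(samples, lr=0.5, epochs=200):
    """Train only the head (logistic regression) with plain SGD."""
    weights = [0.0, 0.0]
    for _ in range(epochs):
        for x, label in samples:
            error = predict(weights, x) - label
            feats = frozen_features(x)
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
    return weights

# Hypothetical small labeled set: label 1 when |x| > 1, else 0.
SAMPLES = [(-2.0, 1), (-1.5, 1), (-0.5, 0), (0.0, 0),
           (0.5, 0), (1.5, 1), (2.0, 1)]

if __name__ == "__main__":
    head = fine_tune_head(SAMPLES)
    for x, label in SAMPLES:
        print(x, label, round(predict(head, x), 3))
```

Because only the two head weights are updated, training cost stays small even when the frozen part is large, which is the efficiency argument behind transfer learning.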

· Power Advanced Natural Language Processing

Build and deploy sophisticated NLP pipelines—including transformer-based models—for translation, text generation, sentiment analysis, and conversational AI. Our infrastructure delivers the throughput and memory bandwidth needed to process large-scale language data efficiently.

· Enable Real-Time Computer Vision Applications

From image classification and object detection to semantic segmentation, our GPU cloud delivers the rapid parallel processing required for high-volume image and video analytics. Support applications in autonomous systems, medical imaging, security, and beyond with low-latency, high-accuracy results.

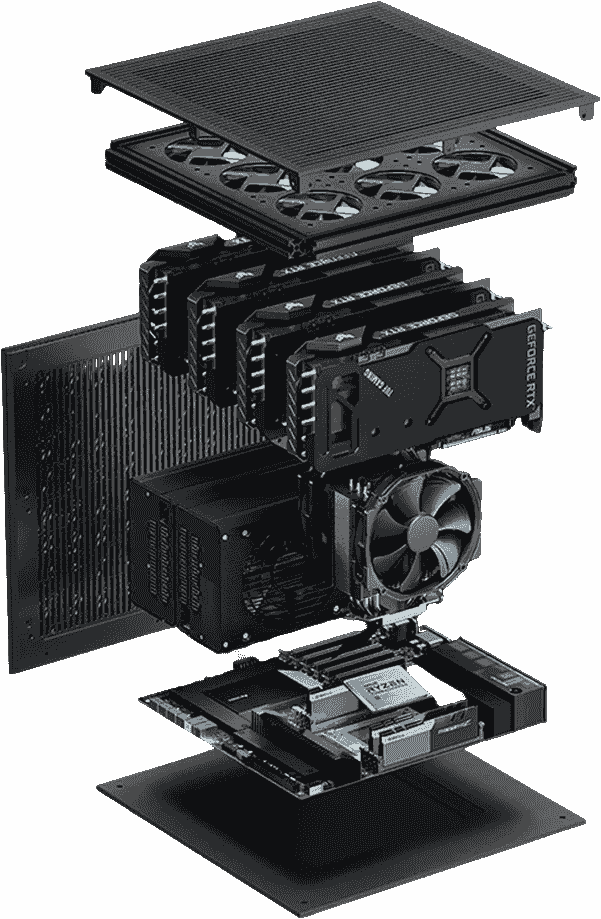

Scalable Power with Cloud GPU for High-Performance Computing on URBAN PORTFOLIO

Empower Discovery & Innovation with GPU-Accelerated High-Performance Computing

Designed to deliver unparalleled performance across scientific, industrial, and research applications, our infrastructure turns complex simulations and massive datasets into actionable insights—faster than ever before.

1. Advanced Scientific & Engineering Simulation

Accelerate large-scale simulations in fields such as computational fluid dynamics, finite element analysis, molecular dynamics, and astrophysical modelling. For workloads that parallelise well, GPU-accelerated HPC can reduce computation time from weeks to hours, enabling deeper iteration and breakthrough discoveries.

2. Accelerated Drug Discovery & Healthcare Innovation

Speed up molecular docking, genome sequencing, and medical image analysis with parallel processing power. From target identification to personalised treatment modelling, our platform helps researchers and biotech firms shorten development cycles and improve outcomes.

3. High-Fidelity Financial Modelling & Quantitative Analysis

Execute complex Monte Carlo simulations, real-time risk analysis, algorithmic trading strategies, and portfolio optimisation with millisecond latency. GPU acceleration delivers the computational throughput required for high-frequency and high-accuracy financial decision-making.
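To make the Monte Carlo workload concrete, here is a minimal CPU-only sketch that prices a European call option by simulation and compares the estimate with the closed-form Black-Scholes value. The function names, parameters, and path count are illustrative assumptions, not part of the platform. Each simulated path is independent of the others, which is why this kind of calculation maps well onto parallel GPU hardware.

```python
import math
import random

def mc_european_call(spot, strike, rate, vol, maturity, n_paths, seed=0):
    """Monte Carlo price of a European call under geometric Brownian motion."""
    rng = random.Random(seed)
    drift = (rate - 0.5 * vol * vol) * maturity
    diffusion = vol * math.sqrt(maturity)
    total_payoff = 0.0
    for _ in range(n_paths):
        z = rng.gauss(0.0, 1.0)  # one standard normal draw per path
        terminal = spot * math.exp(drift + diffusion * z)
        total_payoff += max(terminal - strike, 0.0)
    # Discount the average payoff back to today.
    return math.exp(-rate * maturity) * total_payoff / n_paths

def black_scholes_call(spot, strike, rate, vol, maturity):
    """Closed-form reference price used to sanity-check the simulation."""
    sqrt_t = math.sqrt(maturity)
    d1 = (math.log(spot / strike)
          + (rate + 0.5 * vol * vol) * maturity) / (vol * sqrt_t)
    d2 = d1 - vol * sqrt_t

    def cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

    return spot * cdf(d1) - strike * math.exp(-rate * maturity) * cdf(d2)

if __name__ == "__main__":
    estimate = mc_european_call(100.0, 100.0, 0.05, 0.2, 1.0, 200_000, seed=42)
    exact = black_scholes_call(100.0, 100.0, 0.05, 0.2, 1.0)
    print(f"Monte Carlo: {estimate:.4f}  Black-Scholes: {exact:.4f}")
```

The statistical error shrinks with the square root of the number of paths, so tighter estimates need many more paths; that is where the throughput of a GPU becomes relevant.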

4. Precision Climate Science & Weather Forecasting

Run high-resolution climate models and numerical weather prediction systems with the computational scale needed for accurate, region-specific forecasts. Support disaster preparedness, agricultural planning, and environmental monitoring with timely, data-driven insights.

5. Large-Scale Data Analytics & AI-Ready Pipelines

Process and analyse terabytes of experimental, observational, or operational data efficiently. Our HPC environment integrates seamlessly with machine learning frameworks, enabling advanced pattern recognition, predictive modelling, and insight generation at scale.

6. Streamlined Workflow & Orchestration

- Native SLURM Integration:

Optimise resource allocation and job scheduling for batch processing and large-scale simulations, ensuring maximum cluster utilisation and efficiency.

- Kubernetes-Compatible Infrastructure:

Deploy containerised HPC and AI workloads with ease. Benefit from automated scaling, resilient orchestration, and consistent environments from development to production.
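For the SLURM side, a batch job is typically described by a short script of `#SBATCH` directives. The sketch below is a small Python helper that renders such a script. The helper name `render_sbatch`, the job name, and the command are hypothetical, and site-specific settings such as partitions or accounts are deliberately left out because they are not described here. The directives used (`--job-name`, `--nodes`, `--gres=gpu:N`, `--time`) and `srun` are standard SLURM options.

```python
def render_sbatch(job_name, nodes, gpus_per_node, time_limit, command):
    """Render a minimal SLURM batch script as a string.

    time_limit uses SLURM's HH:MM:SS form, e.g. "02:00:00".
    """
    if nodes < 1 or gpus_per_node < 1:
        raise ValueError("nodes and gpus_per_node must be at least 1")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --gres=gpu:{gpus_per_node}",  # GPUs requested per node
        f"#SBATCH --time={time_limit}",
        "",
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    # Hypothetical job: 2 nodes with 4 GPUs each, 2-hour limit.
    print(render_sbatch("cfd-sim", 2, 4, "02:00:00", "python simulate.py"))
```

The resulting text would be saved to a file and submitted with `sbatch`; the exact resource names available depend on how the cluster is configured.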

7. Scalable Infrastructure for Evolving Workloads

Whether running episodic large-scale simulations or sustaining continuous data-processing pipelines, our platform provides elastic, on-demand resources. Scale compute and memory independently, paying only for what you use—without upfront capital investment.

Power LLMs with URBAN and NVIDIA GPUs

Build & Scale the Future of Language AI with Cloud GPU

Our purpose-built GPU cloud platform delivers the computational power, scalability, and integrated tooling required to train, fine-tune, and deploy state-of-the-art LLMs efficiently.

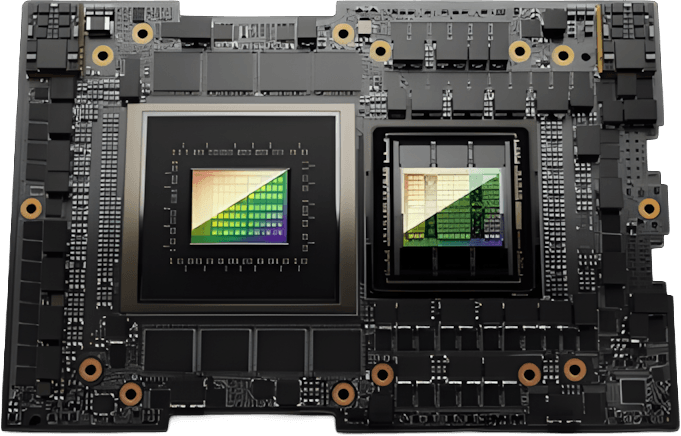

1. Unmatched Performance for LLM Training & Inference

Leverage the latest NVIDIA Tensor Core GPU architectures, optimized for transformer-based models. Achieve faster training cycles, higher throughput, and lower-latency inference, whether working with foundational models or deploying fine-tuned applications in production.

2. Accelerate Development with Pre-Configured Environments

Hit the ground running with our ready-to-use LLM stacks, pre-installed with optimized frameworks, libraries, and model repositories. Reduce setup complexity and focus on innovation, not infrastructure configuration.

3. Scale Resources Dynamically, On Demand

Elastically scale GPU clusters to match your project’s evolving needs—from initial experimentation to full-scale distributed training. Eliminate the upfront cost and maintenance overhead of physical hardware while maintaining consistent performance.

4. Optimize Costs with Flexible Pricing

Adopt a pay-as-you-go model tailored to LLM workloads, ensuring you only invest in the compute you use. Ideal for research sprints, iterative fine-tuning, and production deployments with variable resource demands.

5. Streamline Team Collaboration & Model Governance

Enable seamless, secure collaboration across distributed teams with shared access to GPU resources, version-controlled environments, and centralized model registries. Maintain reproducibility and control throughout the LLM lifecycle.

6. Enterprise-Grade Security & Compliance

Train and deploy LLMs with confidence. Our platform incorporates data encryption, access controls, and compliance frameworks designed to meet enterprise and industry-specific regulatory requirements.

7. End-to-End LLM Operations Support

From data preparation and distributed training to model optimization and serving, we provide integrated tooling and expert support to streamline the entire LLM workflow—helping you move faster from concept to value.

© 2025 URBAN-IT. All rights reserved.